Moote points: Why innovation, trust, and the shifting boundaries of content creation are future-proofing media

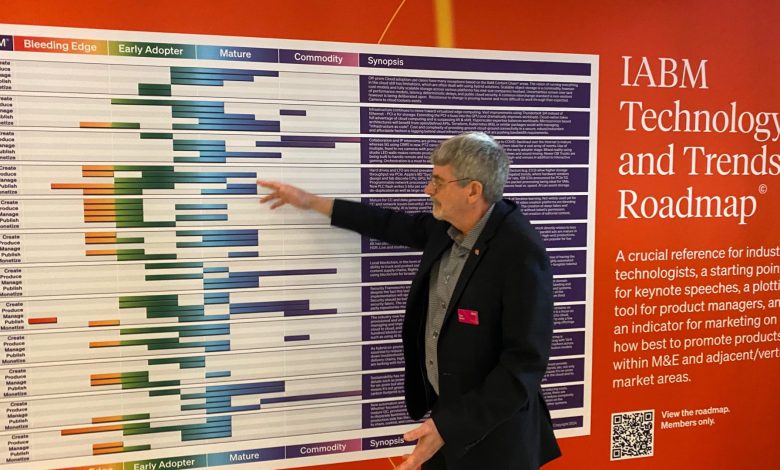

Every year, the IABM Technology & Trends Roadmap provides a visual representation of the lifecycle of business initiatives, particularly within the media and entertainment (M&E) industry, highlighting key technologies and their adoption curves.

To find out what pearls of wisdom the IABM Technology & Trends Roadmap 2025 holds for broadcasters this year and beyond, APB+ sat down for an exclusive chat with Stan Moote, CTO of IABM.

Collaboration was a strong theme last year: How is that evolving now that end-to-end security and Zero Trust are crucial? Are there tensions between openness and protection?

Stan Moote: Collaboration across media supply chains is more critical than ever, but it is increasingly shaped by the demands of Zero Trust (ZT) architecture and end-to-end content security.

As studios, post houses, cloud vendors, and freelancers work across geographies, the old perimeter-based security model no longer holds. Instead, we are seeing widespread adoption of ZT principles — where every user, device, and service must be continuously authenticated, authorised, and validated before accessing content or systems.

Viewing this as a tension between openness and protection is a flawed perspective. Instead, we should recognise that adequate protection enables collaboration and openness by removing threats. This mindset fosters the development of integrated, collaborative tools and ultimately leads to more efficient workflows built on best-of-breed technologies.

The IABM Control Plane Working Group had extensive discussions around ZT in the context of control workflows, where immediate access and real-time feedback, such as when adjusting levels, are critical requirements.

In our collaboration with SMPTE on the ST 2138 Catena protocol, we aimed to ensure that the design aligns with ZT principles. To support a forward-looking ZT-compliant architecture, we implemented OAuth2 to attach an access token to each request.

These tokens are then evaluated by policy enforcement points situated between the client and the enterprise resource, ensuring that access decisions are dynamically and contextually validated.
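The token-per-request pattern described above can be illustrated with a minimal Python sketch of a policy enforcement point (PEP). It is not the ST 2138 Catena API: the in-memory token store stands in for an OAuth2 authorisation server's introspection response, and the names `TOKEN_DB`, `Request`, `enforce`, the scope strings and the `device_trusted` signal are all hypothetical. Each request is checked for a known, unexpired token, a scope covering the requested action, and a contextual signal before it is allowed through to the resource.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TokenInfo:
    """What a (hypothetical) token introspection lookup might return."""
    subject: str
    scopes: frozenset
    expires_at: float  # Unix timestamp


# Hypothetical stand-in for an authorisation server's introspection endpoint.
TOKEN_DB = {
    "tok-abc": TokenInfo(
        subject="operator-1",
        scopes=frozenset({"device:read", "audio:levels:write"}),
        expires_at=time.time() + 300,  # valid for five minutes
    ),
}


@dataclass
class Request:
    token: Optional[str]  # access token attached to this request
    action: str           # e.g. "audio:levels:write"
    device_trusted: bool  # contextual signal, e.g. from a device-posture check


def enforce(request: Request, now: Optional[float] = None) -> bool:
    """Evaluate every request on its own merits; nothing is trusted by default."""
    now = time.time() if now is None else now
    if request.token is None:
        return False  # no token, no access
    info = TOKEN_DB.get(request.token)
    if info is None or info.expires_at <= now:
        return False  # unknown or expired token
    if request.action not in info.scopes:
        return False  # token is not authorised for this action
    return request.device_trusted  # contextual check on top of the token


print(enforce(Request("tok-abc", "audio:levels:write", True)))
print(enforce(Request("tok-abc", "device:reboot", True)))
```

Because `enforce` runs on every call rather than once per session, revoking a token or flagging a device takes effect on the very next request, which is the continuous-validation property Zero Trust calls for. A production PEP would validate signed tokens (for example JWTs) or query the authorisation server rather than consult a dictionary.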

The key is building forward-looking “secure-by-design” collaboration stacks, where openness no longer means uncontrolled access, but rather interoperability among systems that enforce strong, transparent, and auditable controls. The future of media collaboration is not about being less open — it is about being selectively open and verifiably secure.

It is also important to highlight how the rise of AI tools further intensifies the need for strong security. Many AI-powered workflows require ingesting sensitive assets into external models, raising concerns about content provenance, data leakage, and model training compliance.

In response, M&E organisations are increasingly embedding ZT-compliant guardrails into creative tools themselves, and not just at the network layer.

Can you review how the industry has approached tangible sustainability over the past 12 months — specifically, what are the measurable metrics broadcasters are tracking, and how are they reporting on them?

Moote: I reached out to Barbara Lange, Founder & CEO of Kibo, a company focused on sustainability in the media technology industry, for her perspective on this topic. Barbara also contributes to the IABM Technology & Trends Roadmap, specifically around sustainability. Having worked with her during her 12-year tenure as Executive Director of SMPTE, I know she remains deeply connected to the M&E industry.

She shared this insight: “As someone watching sustainability in media-tech, I’ve seen encouraging signs of progress, but we’re far from widespread, consistent reporting. The challenge now is to move from isolated pilots to industry-wide accountability, with shared metrics, transparent disclosures, and a clear path from intention to impact.”

From my perspective, the media technology and broadcast industry has made measured progress toward more tangible sustainability practices over the past 12 months. However, this progress remains uneven and largely incremental. That said, some MediaTech companies have prioritised environmental sustainability for several years and have taken steps to publish detailed reporting, primarily focused on Scope 1 and 2 emissions.

(Scope 1 refers to direct emissions from a company’s own operations, such as manufacturing or service delivery. Scope 2 covers indirect emissions from purchased energy, often mitigated using on-premises renewable sources like solar.)

Increasingly, broadcasters and media tech vendors are beginning to track emissions from production activities, facilities, and transmission infrastructure. However, full Scope 3 emissions, such as those from content delivery networks, viewer device usage, and production supply chains, remain largely unquantified.

Overall, the adoption of standardised emissions reporting metrics is still limited, and while many organisations are making climate-related commitments, the translation of these pledges into actionable, reportable outcomes is still in its early stages.

Cross-industry initiatives are helping to build momentum around sustainable practices in media technology. Efforts such as Greening of Streaming and the Media Tech Sustainability Series are actively shaping best practices, fostering cross-sector dialogue, and promoting measurable impact across the content supply chain. These initiatives are complemented by the work of industry working groups within organisations like EBU, ABU, ATSC, IABM, NABA, and SMPTE, to name but a few.

One tangible example from a project I worked on with Greening of Streaming is the Power Factor initiative. In this case, a participating data centre identified Power Factor as a significant issue. By enabling Power Factor monitoring and then optimising it, they were able to reduce their total power draw by nearly 15%, all while maintaining the same computing capacity.

To illustrate this further, consider a single network router. At full capacity, it demonstrated an excellent Power Factor of 0.95 or better, meaning it used electricity very efficiently. However, under light loads, its Power Factor dropped significantly to 0.6 or worse.

This means a router rated for 500 watts might require nearly twice that much power generation during low-traffic periods due to the inefficiency introduced by poor Power Factor.

For context, a Power Factor of 1.0 indicates perfect efficiency. In contrast, a Power Factor of 0.5 means twice as much power needs to be generated compared to what the equipment consumes.

On the consumer side, TV sets show even more dramatic variations in Power Factor. For example, when displaying bright, dynamic content, some TVs maintain a Power Factor around 0.9, indicating efficient power use. However, in standby mode, the Power Factor can drop as low as 0.1.
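The arithmetic behind these figures follows from the standard definition of Power Factor (PF) as the ratio of real power P, in watts, to apparent power S, in volt-amperes, so the supply must deliver S = P / PF. The short Python sketch below applies that relationship to the router and TV figures quoted above. It simplifies by treating the 500 W rating as the real power drawn, whereas a lightly loaded router would draw less, so it is the ratio rather than the absolute figure that carries over. The function names are illustrative.

```python
def apparent_power_va(real_power_w: float, power_factor: float) -> float:
    """Apparent power S (VA) the supply must deliver for real power P (W): S = P / PF."""
    if not 0 < power_factor <= 1:
        raise ValueError("power factor must be in the range (0, 1]")
    return real_power_w / power_factor


def overhead_ratio(power_factor: float) -> float:
    """Volt-amperes that must be supplied per watt actually consumed."""
    return apparent_power_va(1.0, power_factor)


# Router figures from the text: near-ideal at full load, poor at light load.
for pf in (1.0, 0.95, 0.6, 0.5):
    print(f"PF {pf:.2f}: 500 W needs {apparent_power_va(500, pf):.1f} VA")

# TV figures from the text: bright content vs. standby.
print(f"TV at PF 0.9 -> {overhead_ratio(0.9):.2f} VA per W")
print(f"TV at PF 0.1 -> {overhead_ratio(0.1):.2f} VA per W")
```

At PF 0.6 the router needs roughly 833 VA for 500 W, and at PF 0.5 exactly double, which is why "0.6 or worse" approaches the "nearly twice" figure. A TV in standby at PF 0.1 needs ten volt-amperes for every watt it uses, although its standby draw in watts is small.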

The bottom line is that the industry still lacks consistent and comparable benchmarks for sustainability. Regulatory drivers, such as the EU’s Corporate Sustainability Reporting Directive (CSRD), are starting to drive more formal ESG reporting, especially among Europe-based media groups. However, without industry-wide mandates or stronger market incentives, sustainability efforts risk remaining fragmented, inconsistent, and largely voluntary.

With millions using tools like CapCut, where do you see the biggest overlap between creator-grade and broadcast-grade ecosystems, and should there be concerns about this convergence?

Moote: This excellent question highlights a critical shift underway in the media and entertainment industry: the technological and creative convergence between creator-grade and broadcast-grade ecosystems.

The convergence is most evident in how tools like CapCut, DaVinci Resolve, Runway, and Adobe Premiere Pro (with Firefly integration) are closing the gap between amateur and professional content creation. What was once the exclusive domain of post houses and specialised suites — high-resolution editing, motion graphics, and keyframe animation — is now accessible via cloud-native, GPU-accelerated pipelines.

These platforms now include AI models for tasks like background removal, lip-syncing, voice cloning, and style transfer, along with pre-trained machine learning (ML) effects such as auto-beat sync, shot matching, and AI-driven, text-based video editing. As a result, creators can achieve production quality that previously required specialist editing and VFX teams, while dramatically compressing timelines.

While broadcast-grade systems are governed by strict technical standards such as SMPTE ST 2110, CCIR 601, Rec. 709/2020 colour spaces, and EBU R128 loudness, many of these tools export content in widely accepted formats like H.264, ProRes, and HEVC, adhering to industry-standard resolutions and bitrates. Some even offer advanced metadata tagging that, in certain cases, surpasses the metadata sophistication found in many traditional broadcast operations.

I do have concerns about Quality Control (QC). Working within the EBU QC Data Model group, we have focused on ensuring QC data travels alongside the content throughout its entire lifecycle. However, workflow fragmentation, where creator tools often operate in silos or within proprietary cloud environments, complicates interoperability and version control when creator-originated assets are integrated into broadcast workflows.
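The idea of QC data travelling alongside content can be sketched as a sidecar record bound to the exact asset by a cryptographic hash. This Python example is illustrative only and does not follow the EBU QC Data Model schema; the field names and functions are hypothetical. It checks already-measured loudness values (measurement to ITU-R BS.1770 is assumed to happen elsewhere) against the EBU R128 target of -23 LUFS and maximum true peak of -1 dBTP, with a ±0.5 LU tolerance assumed for file-based delivery.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

R128_TARGET_LUFS = -23.0
R128_TOLERANCE_LU = 0.5         # assumption: tolerance for file-based (non-live) content
R128_MAX_TRUE_PEAK_DBTP = -1.0


@dataclass
class QCResult:
    check: str
    passed: bool
    detail: str


def loudness_checks(integrated_lufs: float, true_peak_dbtp: float) -> list[QCResult]:
    """Compare pre-measured values with EBU R128 targets (the meter itself is not shown)."""
    deviation = integrated_lufs - R128_TARGET_LUFS
    return [
        QCResult("integrated_loudness", abs(deviation) <= R128_TOLERANCE_LU,
                 f"{integrated_lufs:.1f} LUFS ({deviation:+.1f} LU from target)"),
        QCResult("true_peak", true_peak_dbtp <= R128_MAX_TRUE_PEAK_DBTP,
                 f"{true_peak_dbtp:.1f} dBTP"),
    ]


def qc_sidecar(asset_bytes: bytes, results: list[QCResult]) -> str:
    """Hypothetical JSON sidecar tying QC results to the asset's SHA-256 hash."""
    record = {
        "asset_sha256": hashlib.sha256(asset_bytes).hexdigest(),
        "results": [asdict(r) for r in results],
        "all_passed": all(r.passed for r in results),
    }
    return json.dumps(record, indent=2)


def sidecar_matches(asset_bytes: bytes, sidecar_json: str) -> bool:
    """Detect whether the asset was altered after QC was run."""
    expected = json.loads(sidecar_json)["asset_sha256"]
    return expected == hashlib.sha256(asset_bytes).hexdigest()


asset = b"example essence bytes"  # placeholder for real media essence
sidecar = qc_sidecar(asset, loudness_checks(-23.4, -1.5))
print(sidecar)
print(sidecar_matches(asset + b" re-edited", sidecar))
```

Binding the record to a hash means that when a creator-originated file is re-edited in a siloed tool, downstream broadcast systems can see that the attached QC results no longer describe the file in hand.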

Regarding misinformation, AI-generated or edited content may meet technical specifications yet still violate editorial standards. Examples include deepfakes, synthetic B-roll, or undisclosed generative elements, which pose significant challenges for content integrity and trust.

Have we reached a point where the aesthetic of “broadcast-quality” is no longer a clear differentiator? I may have golden eyes, but during the COVID period, pretty much any new content, regardless of format or quality, was accepted by viewers. That said, with 4K and 8K sets, viewers tend to avoid poor-quality content within the home environment, showing that quality still matters.

Broadcast networks now must implement automated rights tracking and provenance systems (such as C2PA) to manage ownership and usage. In contrast, generative assets like music, voice, and visuals produced by creators often introduce rights management uncertainties. This poses critical challenges for downstream syndication, monetisation, and international compliance.

Ultimately, the divide between creators and broadcasters is becoming less about creativity and more about procedural and technical differences. The real question is whether this fast-evolving creator landscape can continue to grow while effectively meeting the legal and compliance standards that traditional broadcasters have long been held to.

With AI-powered analytics and churn management now mainstream, what changes have you observed and is anyone getting left behind?

Moote: Absolutely, there are still some areas where the industry is playing catch-up. A prime example is Predictive Churn Modelling, where streaming platforms leverage AI and ML to predict when a subscriber might cancel their service. Typical indicators include a decrease in viewing time, billing issues, frequent pausing, and ignoring trailer bumpers or new release notifications.
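One common way to turn indicators like these into a churn prediction is a logistic model that combines them into a probability. The Python sketch below shows only the scoring step; the feature names, weights and bias are hypothetical placeholders, not values from any real platform, and in practice they would be learned from historical subscriber data.

```python
import math
from dataclasses import dataclass


@dataclass
class SubscriberSignals:
    viewing_time_change: float    # fractional change vs. previous period (-0.4 = 40% drop)
    billing_failures: int         # failed payments in the period
    pauses_per_session: float     # average number of pauses per viewing session
    notifications_ignored: float  # share of new-release notifications ignored, 0..1


# Hypothetical weights for illustration only; a real model would fit these.
WEIGHTS = {
    "viewing_time_change": -2.5,  # negative weight: falling viewing time raises risk
    "billing_failures": 1.2,
    "pauses_per_session": 0.3,
    "notifications_ignored": 1.5,
}
BIAS = -3.0


def churn_probability(s: SubscriberSignals) -> float:
    """Logistic model: p = 1 / (1 + exp(-(bias + sum of weight * feature)))."""
    z = BIAS + sum(w * getattr(s, name) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


engaged = SubscriberSignals(viewing_time_change=0.1, billing_failures=0,
                            pauses_per_session=1.0, notifications_ignored=0.1)
at_risk = SubscriberSignals(viewing_time_change=-0.5, billing_failures=1,
                            pauses_per_session=4.0, notifications_ignored=0.9)

print(f"engaged: {churn_probability(engaged):.2f}")
print(f"at risk: {churn_probability(at_risk):.2f}")
```

A platform could then route subscribers above a chosen probability threshold to the tailored retention tactics described below, such as a discount or a personalised content recommendation.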

Media companies that can collect and analyse their audience data are clearly ahead of the game, as they can engage subscribers directly using tailored tactics, from offering discounts to recommending new content aligned with viewer preferences.

By contrast, many traditional broadcasters and cable networks lack this granular viewer data. Even ad-driven broadcasters face similar challenges: losing viewers means lower ratings, which is akin to losing subscribers. This is exactly why advertisers need to think differently, moving beyond broad demographic targeting toward customised, personalised ads that resonate more deeply with individual viewers.

One of the fastest-growing sectors in streaming is niche content, whether highly localised or indie productions. These creators often cannot afford churn-management platforms and usually do not have enough subscriber data to generate predictive insights or develop targeted retention strategies. Instead, they rely on understanding audience dynamics through “likes”, comments, and social media feedback, reacting quickly with new and exhilarating content to keep their viewers engaged.

Similarly, single live event productions fall into this category, relying primarily on immediate audience responses from social channels rather than predictive analytics.

Are there any moot points or future-looking thoughts that you would like to share?

Moote: We have already touched on the creator community, which is often seen as a disruptive force in the media landscape. However, broadcasters who choose to engage with this community are beginning to uncover real opportunities for hybrid models and collaborative ventures that extend reach and boost audience engagement.

According to IABM research, innovation and a clear future roadmap continue to be the primary drivers of investment in our industry. This innovation must extend beyond traditional production technologies to include Business Technology — tools and systems that support new business models, drive operational efficiency, and ultimately improve profitability.

Looking ahead, the companies most likely to thrive will be those that can adapt quickly, form creative partnerships, and invest not only in compelling content, but also in the technologies that power its creation, distribution, and monetisation.

Article first published at APB News.